| Field | Details |
| --- | --- |
| Type | High-performance computing machine |
| Impact | Laid the groundwork for modern artificial intelligence • Enabled the development of high-performance computing |
| Early use | Scientific research • Weapons development • Weather forecasting • Code breaking |
| Emergence | 1940s |
| Limitations | Size and power consumption of vacuum tubes |
| Core technology | Vacuum tubes |

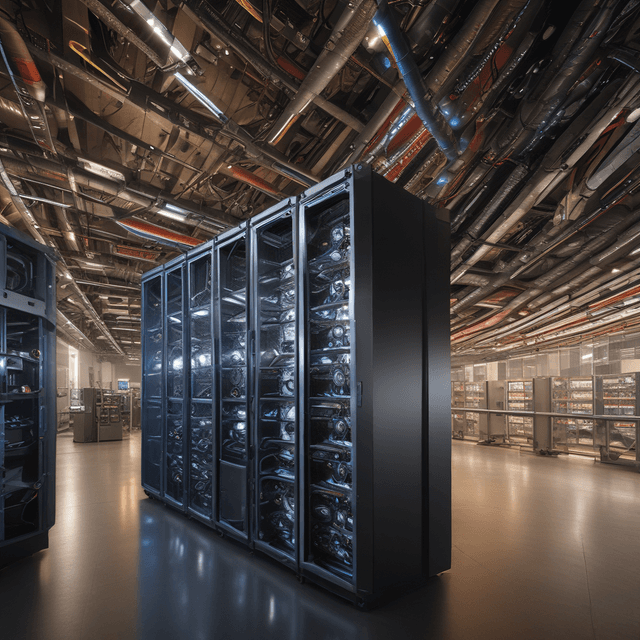

A supercomputer is a computing machine that operates at the frontier of processing speed and computational power; the fastest modern systems execute hundreds of trillions of calculations per second or more. In this alternate timeline, the origins of supercomputer technology can be traced back to the 1940s, predating the development of transistors and integrated circuits.

The earliest supercomputers emerged from rapid advances in vacuum-tube electronics during and after World War II. Scientists and engineers in countries such as the United States, the United Kingdom, the Soviet Union, and Japan raced to develop ever more powerful vacuum-tube computers for military and scientific applications.

The first true supercomputer, the ENIAC (Electronic Numerical Integrator and Computer), was unveiled at the University of Pennsylvania in 1946. Weighing over 30 tons and occupying 1,800 square feet, it used roughly 18,000 vacuum tubes and could perform 5,000 additions per second. Other early landmark designs included the UNIVAC I in the United States, the Manchester Mark 1 in the United Kingdom, and the MESM in the Soviet Union.

As the Cold War intensified scientific and technological competition between the superpowers, government funding and the resources of national research laboratories became essential to building increasingly powerful supercomputers. Key institutions included:

These government-backed labs designed, built, and operated some of the most advanced supercomputer systems of the 1940s, 1950s, and 1960s, pushing the boundaries of vacuum-tube technology. Applications ranged from nuclear weapons research to weather forecasting, code breaking, and early artificial intelligence experiments.

While vacuum-tube computers offered immense processing power for their time, they suffered from significant drawbacks that kept them from achieving the ubiquity and miniaturization of later transistor-based systems. Key limitations included:

As a result, the golden age of vacuum-tube supercomputers was relatively short-lived, with most major research institutions transitioning to transistor-based architectures by the mid-1960s. This ushered in a new era of smaller, more energy-efficient, and more reliable high-performance computing.

Nevertheless, the pioneering vacuum-tube supercomputers of the 1940s and 1950s laid critical groundwork for the modern computing industry. They demonstrated the potential of massively parallel processing for scientific and military applications and served as a launching pad for the emerging field of artificial intelligence.

Many techniques and design principles from these early supercomputers, such as pipelining, vector processing, and parallel processing, were later reapplied and refined in subsequent generations of transistor-based systems. They also inspired the development of novel programming languages, operating systems, and debugging and monitoring tools essential for managing the complexity of large-scale computing.

Although no longer the state of the art, vacuum-tube supercomputers live on in their legacy: specialized high-performance computing (HPC) hardware, powerful mainframe computers, and the continued advancement of AI and scientific modeling software. Their impact on 20th-century science, military strategy, and the trajectory of computing technology was profound.